Unmasking Deepfakes: Insights from the Latest FinCEN Alert

November 19, 2024

6 minutes read

Knock Knock, it is Deepfake Media at your service!

Do you remember seeing the AI-generated image of Pope Francis wearing a white puffer coat? Or the explicit fake images of Taylor Swift that caused an uproar on social media?

Further, this February, a mind-boggling episode came to light: an employee at a multinational firm in Hong Kong paid $25 million to fraudsters on the instructions of a deepfake Chief Financial Officer during a video call. What to believe and what not to believe has become a real question now!

Introduction

It all began in 2023, when a report noted a 700% increase in deepfake incidents in fintech. Many subject-matter experts believe these incidents are just the tip of the iceberg, which is alarming for financial regulators across the globe.

Financial institutions (“FIs”) are the backbone of every living, breathing, and flourishing economy. If you want to target the government, its people, and its ecosystem as a whole, take a shot at the FIs of that country. Yes, they are that vital and impactful in unimaginable ways.

The U.S. Department of the Treasury’s Financial Crimes Enforcement Network (“FinCEN”) has seen a rise over the last two years in financial institutions reporting suspicious activity that points to the possible use of deepfake media in fraud schemes targeting their organizations and clients.

FinCEN again shocked the financial ecosystem last week by issuing an alert titled “FinCEN Alert on Fraud Schemes Involving Deepfake Media Targeting Financial Institutions.” How could it not send a shock wave when an arm of the government puts out an alert like that yet again?

The alert aims to help FIs identify fraud schemes that use generative artificial intelligence (“GenAI”) tools to create deepfake media. Among other things, it offers the following broad takeaways:

- Typologies associated with a few of the many fraud schemes circulating in the present environment,

- Red flag indicators to assist with identifying and reporting related suspicious activity,

- A reminder for FIs of their reporting requirements under the Bank Secrecy Act (BSA), and

- Information for FIs on the opportunities and challenges that may arise from the use of AI.

Furthermore, fraud and cybercrime—two of FinCEN’s National Priorities for Anti-Money Laundering and Countering the Financing of Terrorism (AML/CFT)—are exacerbated by the misuse of deepfake and GenAI media.

So, let us take a deep dive into why FinCEN is so worried about deepfakes.

What is Deepfake Media?

Before anything, let us first get an understanding of what Deepfake media is.

“Deepfake media, or ‘deepfakes,’ are a type of synthetic content that use artificial intelligence/machine learning to create realistic but inauthentic videos, pictures, audio, and text,” mentions the Department of Homeland Security.

According to Deloitte’s Center for Financial Services, GenAI could push fraud losses in the US from US$12.3 billion in 2023 to US$40 billion by 2027, a compound annual growth rate of 32%.
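For readers who want to sanity-check the growth figure, here is a minimal Python sketch of the standard compound annual growth rate formula applied to the two endpoints quoted above. It assumes four compounding periods (2023 to 2027); the function and variable names are illustrative and not taken from Deloitte's report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints as quoted in the article, in US$ billions.
losses_2023 = 12.3
losses_2027 = 40.0

# Assumption: four compounding periods between 2023 and 2027.
implied = cagr(losses_2023, losses_2027, years=2027 - 2023)
print(f"Implied CAGR from the endpoints: {implied:.1%}")

# For comparison: compound the 2023 figure at the quoted 32% for four years.
projected_2027 = losses_2023 * (1 + 0.32) ** 4
print(f"2027 losses at 32% per year: US${projected_2027:.1f} billion")
```

Under these assumptions the endpoints imply roughly 34% a year, while compounding at exactly 32% gives about US$37 billion by 2027. The small gap is presumably down to rounding or a different base period in the underlying forecast, which is an assumption here rather than something the source states.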

Criminal Activity and Deepfake Media

Criminals use cutting-edge, fast-developing technologies such as GenAI to reduce the time, money, and resources required to defeat financial institutions’ identity verification procedures. BSA reporting shows numerous instances of criminal organizations using deepfakes in this way.

To build false identities, criminals have also started combining GenAI images with either stolen or completely fabricated personally identifiable information (PII). They have successfully opened accounts under fictitious identities suspected of being produced with GenAI, then used those accounts to receive and launder the proceeds of other fraud schemes.

These fraud schemes include online scams and consumer fraud, such as credit card fraud, authorized push payment fraud, loan fraud, unemployment fraud, and check fraud. According to BSA reports, criminals have also used GenAI-generated identity documents to open fraudulent accounts and use them as funnel accounts.

The number of crimes committed using these technologies is rising every day, so there is an urgent need to take measures to curb them.

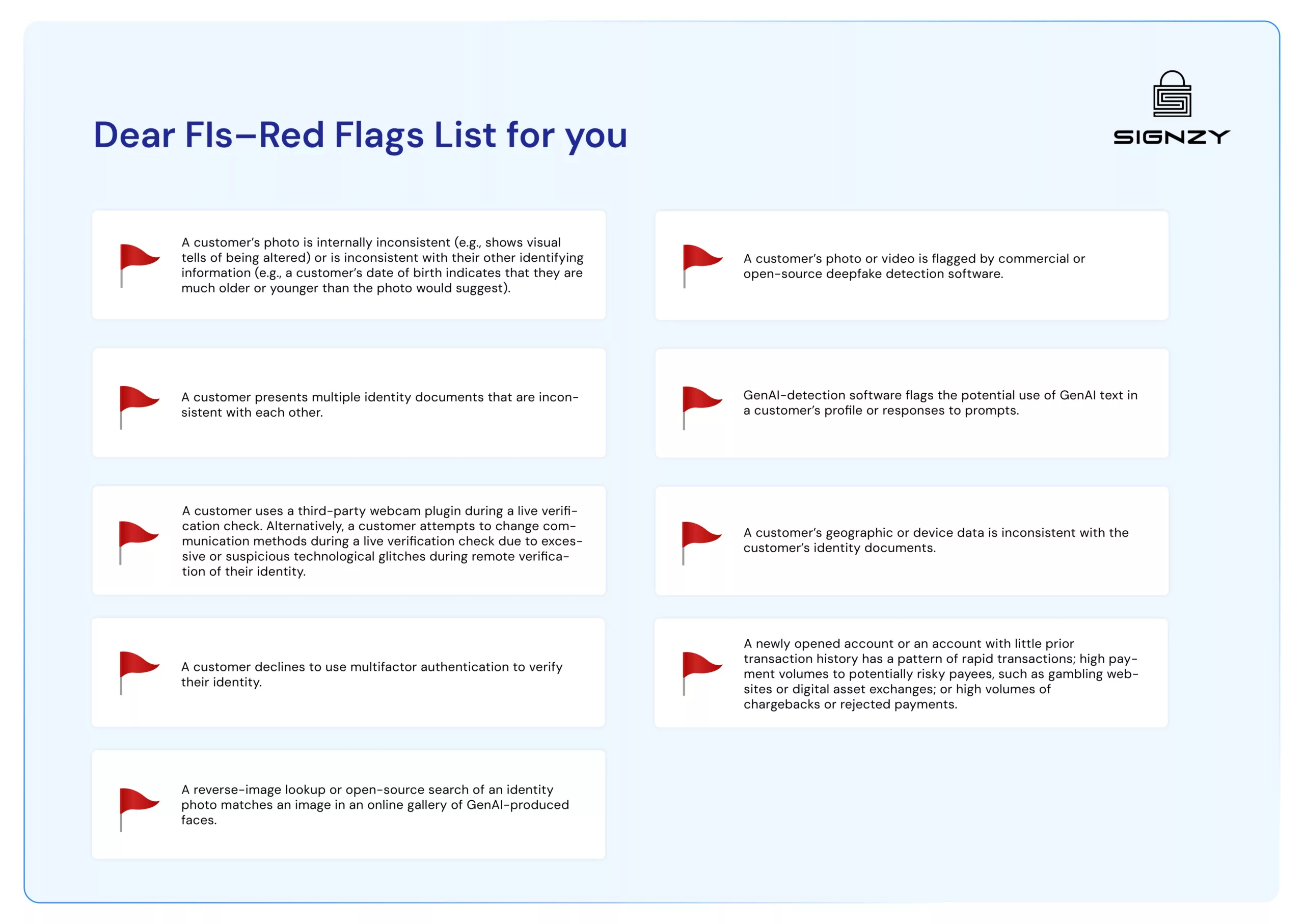

Dear FIs–Red Flags List for you

At Signzy, we firmly believe in offering our clients foolproof solutions that help them expand their businesses while shielding them from scammers. To catch early indicators of deepfakes, you should build or adopt systems and programs that act as checkpoints and detect red flags within your business model.

Alongside financial sector experts, regulators are concentrating on the potential and the risks of GenAI. New industry standards are the need of the hour, and banks should be actively involved in developing them. By incorporating compliance early in the technology development process, banks can have a record of their systems and procedures ready in case regulators require it.

How can Signzy help you?

Here is a brief of a few of our products that can be useful in effectively dealing with the threats of Deepfake:

- Image and Selfie Deepfake Detection: Detect manipulated images, which is crucial for KYC and BGV processes

- Video and Livestream Deepfake Detection: Analyze spatio-temporal inconsistencies to identify fake video content

- Audio Deepfake Detection: Secure your voice communications with a highly accurate detection model

- One-touch KYC Solution: Verify identities seamlessly and secure your entire user journey

Explore Signzy’s Verification Suite ➡️

Conclusion

One of the main issues is how easy it is to get software that can create deepfakes. If reports are to be believed, there is a whole cottage industry on the dark web selling scamming software for anything from $20 to hundreds of dollars.

The banking system is the sector everyone relies on and trusts the most. A threat to the banking ecosystem can therefore be the reason for the downfall of a whole country's economy and, eventually, the global economy. By 2027, generative AI is predicted to dramatically increase the risk of fraud, which could cost US banks and their clients up to $40 billion. Yes, it can be that scary!

“While GenAI holds tremendous potential as a new technology, bad actors are seeking to exploit it to defraud American businesses and consumers, including financial institutions and their customers,” said FinCEN Director Andrea Gacki. “Vigilance by financial institutions to the use of Deepfakes, and reporting of related suspicious activity will help safeguard the U.S. financial system and protect innocent Americans from the abuse of these tools.”

Let us all be careful and mindful of building an infrastructure that can safeguard our businesses.

Here is the link to the FinCEN alert.